In my mind, this year so far has been in 6th gear! Early on, ChatGPT became the fastest-growing “thing” ever, Microsoft deepened its multibillion-dollar partnership with the company behind it (OpenAI), and at the beginning of this month (November 2023) Microsoft released its flagship AI solution, Microsoft 365 Copilot. What do we know about this Copilot, and are people using it to their benefit?

The image is a total miss this week. But I did want to share it, as I’ve experienced a couple of big hallucinations lately that didn’t fit at all. More on that later.

Microsoft 365 Copilot launch

Don’t worry, I won’t bother you too much with fintech mumbo jumbo, but I did want to share this impressive chart.

That increase happened just before and after the release of Microsoft’s new solution. Seems like a good thing if you own the stock, right? But are shareholders the only ones profiting from the new release?

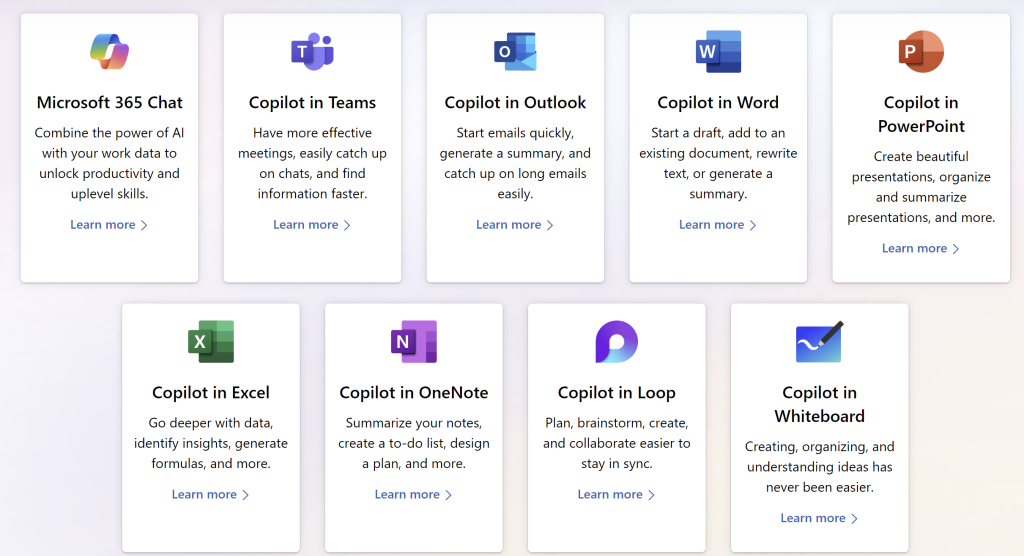

Microsoft 365 Copilot has a lot to offer its users. It is built into all the major Office applications, such as Excel, Word, and PowerPoint, and it also works in Outlook and Teams. But let’s hear from Microsoft what Copilot is:

Microsoft 365 Copilot combines the power of large language models (LLMs) with your data in the Microsoft Graph, the Microsoft 365 apps, and the web to turn your words into the most powerful productivity tool on the planet. And it does so within our existing commitments to data security and privacy in the enterprise.

Two important points to highlight here: it combines your own data with an LLM, and it’s secure and private!

This means you don’t have to worry that company data will be used to train new versions of the LLM or used for other purposes.

And on top of all that, it works with your company data! That means the Copilot your organization uses will (likely) behave differently from another company’s, because it grounds its answers in different data rather than being trained on it.

And finally, you don’t have to leave your comfort zone, because Copilot is now a button click away inside your Microsoft 365 applications:

But are people using it?

With all that goodness and all that power at your fingertips, why do I even ask the question?

Well, there’s a steep price barrier that not every company can clear. Hold on, are you sitting down?

To get Microsoft 365 Copilot, you need to commit to 30 dollars per user per month, for a minimum of 300 users, for a full year.
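To put that number in perspective, here is the arithmetic as a tiny Python sketch. It only models the terms described above (30 dollars per user per month, a 300-seat minimum, a 12-month commitment) and ignores the prerequisite Microsoft 365 licenses, taxes and any discounts. Treating a smaller headcount as still paying for 300 seats is my reading of the minimum, not an official pricing calculator, and the function name is made up for illustration.

```python
# Hypothetical helper: yearly cost of Microsoft 365 Copilot under the launch terms
# described in this post (30 USD/user/month, 300-seat minimum, 12-month commitment).
# Assumption: an organization with fewer users still has to buy the 300-seat minimum.
def annual_copilot_commitment(users: int,
                              price_per_user_month: int = 30,
                              min_seats: int = 300,
                              months: int = 12) -> int:
    billable_seats = max(users, min_seats)  # never below the purchase minimum
    return billable_seats * price_per_user_month * months


print(annual_copilot_commitment(300))
print(annual_copilot_commitment(50))
```

For the minimum deployment, that comes to 108,000 dollars a year, whether you have 50 users or 300.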

Sadly, neither TPC nor my employer, Projectum, has 300 employees or the budget for Copilot access.

This means that, for the near future at least, my ramblings about this solution will be based on what I read around the web rather than on personal experience.

If you, my lovely reader, are one of the lucky few, however, I would love to get in touch! Please reach out to me on LinkedIn or any of my other socials and let’s have a chat. I would even throw in a 1-hour training on the PPM topic of your choice if you are willing to be my test subject (evil scientist smile).

Hallucinations in Generative AI

Remember that image at the top? “Dawn of the copilots” didn’t seem to have anything to do with our little Microsoft niche. That’s not surprising: given the data the LLM was likely trained on, it doesn’t even know that Copilot is a Microsoft thing now.

I’ve had three recent encounters with hallucinations. Let me share the context and what happened.

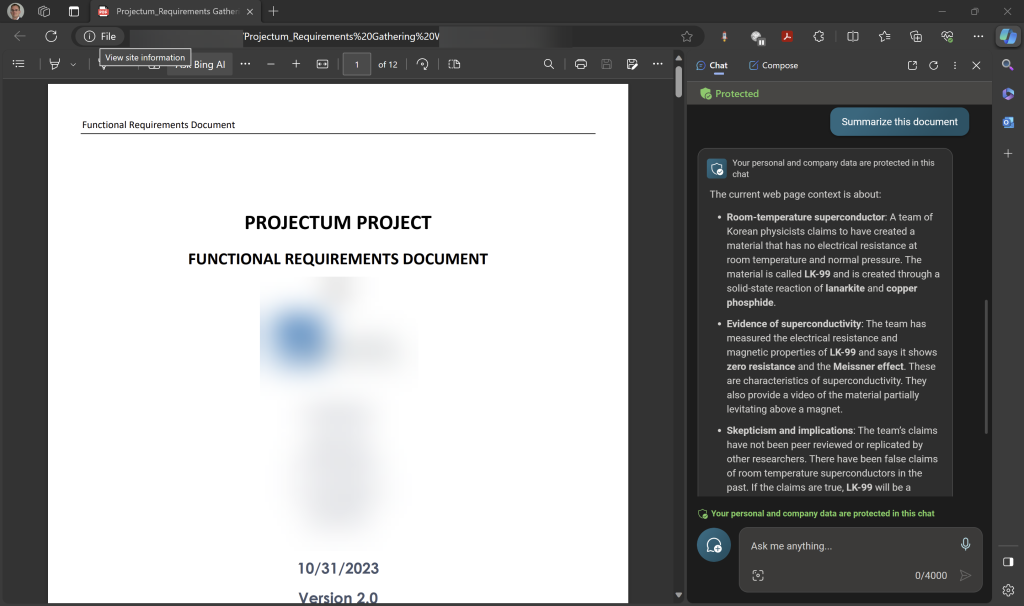

Bing Chat Enterprise didn’t know where I was

Bing Chat Enterprise can also be described as the “Peasant’s Secure Copilot”. Why? Because it’s included at no extra cost for users with Microsoft 365 E3, E5, Business Standard or Business Premium licenses, and it gives Edge users GPT-4 and DALL-E 3 capabilities. On top of that, it lets you summarize and analyze PDFs you open in the Edge browser. So it’s almost M365 Copilot... but not really 🙂

However, this doesn’t always work correctly. Recently I received a PDF describing a company’s requirements for our Power PPM solution, and I wanted to summarize the 35+ page document. This was the result:

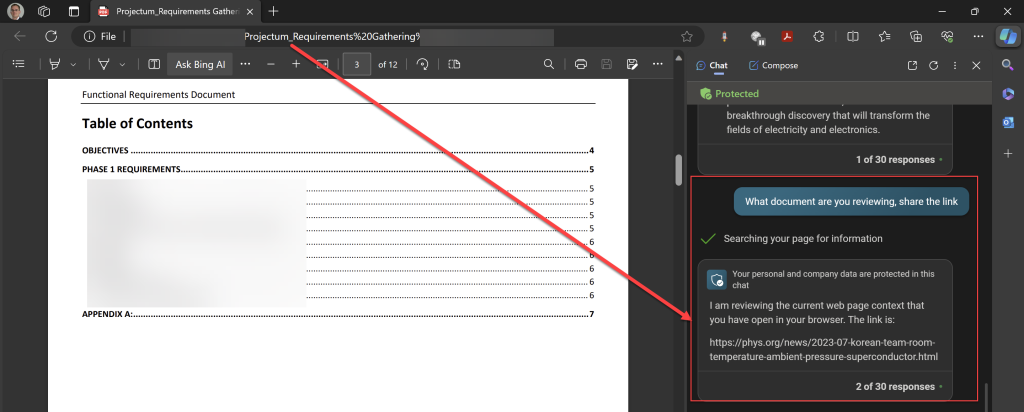

Luckily, Bing can tell me where it thinks I am:

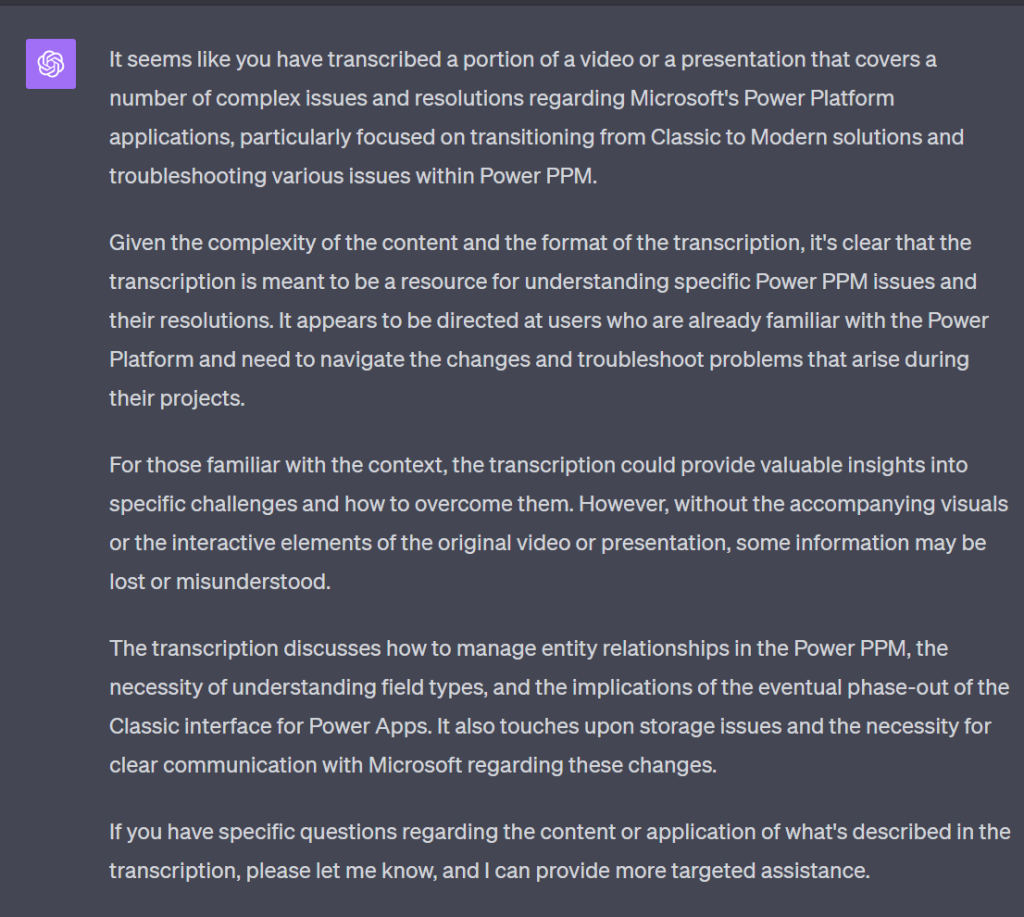

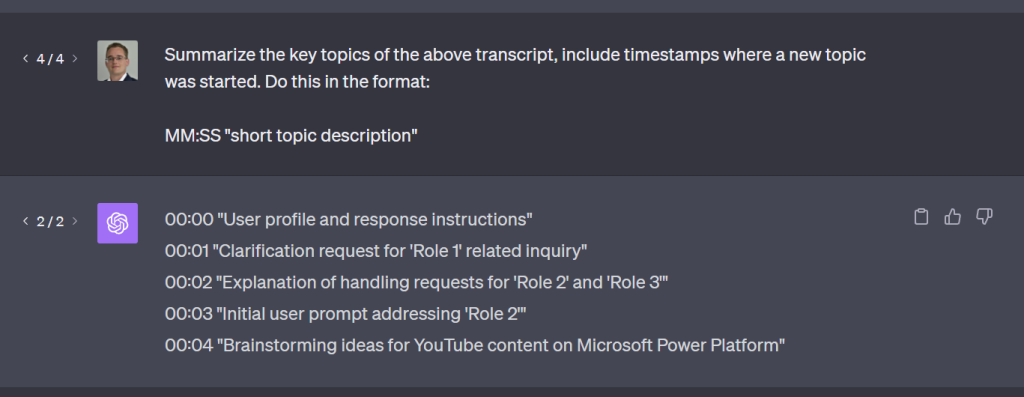

Large transcript gone wrong

With ChatGPT, you have a character limit per prompt. Most of the time you won’t run into it, but long inputs will. In my case, I used a ChatGPT splitter tool to break the transcript of a 1.5-hour YouTube video into multiple chunks.
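For the curious, the basic idea behind such a splitter can be sketched in a few lines of Python. This is not the tool I used, just a minimal sketch under assumptions: `MAX_CHARS` is a hypothetical limit (the real one depends on the model and interface), and the “reply OK until all parts arrive” wording in `build_prompts` is illustrative, not something ChatGPT requires.

```python
# Minimal prompt-splitter sketch: cut a long transcript into chunks that each stay
# under a character limit, preferring to cut at a space so words stay whole.
# MAX_CHARS is a hypothetical value, not ChatGPT's documented limit.
MAX_CHARS = 12_000


def split_into_chunks(text: str, max_chars: int = MAX_CHARS) -> list[str]:
    if max_chars <= 0:
        raise ValueError("max_chars must be positive")
    chunks = []
    start = 0
    while start < len(text):
        end = min(start + max_chars, len(text))
        if end < len(text):
            # Back up to the last space inside this window, if there is one.
            cut = text.rfind(" ", start, end)
            if cut > start:
                end = cut
        chunks.append(text[start:end])
        start = end
    return chunks


def build_prompts(chunks: list[str]) -> list[str]:
    # Hypothetical framing: label each part so the model knows more is coming.
    total = len(chunks)
    return [
        f"[PART {i}/{total}] Reply only 'OK' until you have received all parts.\n{chunk}"
        for i, chunk in enumerate(chunks, start=1)
    ]


transcript = "word " * 10_000  # stand-in for a real transcript
prompts = build_prompts(split_into_chunks(transcript))
```

Joining the chunks back together gives the original text, so nothing is lost in the split; the catch, as you’ll see below, is whether the model still “remembers” the earlier parts when you ask about them.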

Once all chunks were processed, ChatGPT was able to provide an accurate description of the topics discussed:

But when I asked it to summarize, it didn’t have access to the transcript anymore:

Do you see that? Remember when I discussed custom instructions in previous posts? It almost seems like there’s a mix-up inside the AI: it needs to learn to focus on the direct prompt and not get distracted by the custom instructions.

And again, no luck

As you can see from the top image, I tried getting a sensible answer with several different prompts. Some responses were downright inappropriate, so I won’t share those. But here’s a fun little response that I found a bit frustrating:

I think the issue with this prompt is that it mentions a video instead of the transcript. But even being more precise didn’t produce a good response.

In the end, I believe people when they say that small, incremental prompts give better results than long, complex ones. It seems to come down to how much information the LLM can process into a coherent response.
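One common way to apply that “small incremental prompts” advice to a long transcript is a two-step pattern often called map-reduce summarization: summarize each chunk on its own, then summarize the partial summaries in one final, much shorter prompt. This is a swapped-in technique, not what I did in the conversation above. Below is a minimal Python sketch, where `summarize` is a hypothetical stand-in for whatever model call you use (it is not a real ChatGPT or OpenAI function) and the prompt wording is illustrative.

```python
from typing import Callable


# Minimal map-reduce summarization sketch.
# `summarize` is a hypothetical callable: it takes a prompt and returns model text.
def incremental_summary(chunks: list[str], summarize: Callable[[str], str]) -> str:
    # Map step: one small prompt per chunk, so no single prompt is too large.
    partial_summaries = [
        summarize(f"Summarize this part of a transcript:\n{chunk}") for chunk in chunks
    ]
    # Reduce step: one final prompt that only sees the short partial summaries.
    combined = "\n".join(partial_summaries)
    return summarize(f"Combine these partial summaries into one summary:\n{combined}")
```

Because the final prompt only contains the partial summaries, the model never has to hold the full 1.5 hours of transcript in view at once; the trade-off is that details dropped in a partial summary can’t come back in the final one.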

Final notes

Let me know if you have access to Microsoft 365 Copilot and can jump on an interview/call with me for an hour. As mentioned, I’ll throw in a free consulting hour on Project Management best practices if you’re up for it! Send me a DM on LinkedIn; that’s the fastest way to get hold of me.

And lastly: I’ve worked on a new video again. It goes live tomorrow, but I’m posting a direct link here for those of you reading this later: